Apache Hadoop: Resolve “org.apache.hadoop.hdfs.server.namenode.SafeModeException”

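The org.apache.hadoop.hdfs.server.namenode.SafeModeException exception is thrown when a client attempts a write operation (such as creating, deleting, or renaming a file) while the NameNode is in safe mode. Safe mode is a read-only state for the Hadoop Distributed File System (HDFS) cluster. In safe mode, the NameNode still serves read requests, but it rejects any modification to the file system and does not replicate or delete blocks.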

There are a few reasons why the NameNode might enter safe mode:

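- The NameNode is starting up. It enters safe mode on every start, not only the first one, and stays there while it loads the file system image and waits for the DataNodes to report their blocks.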

- The NameNode has been manually put into safe mode.

- The NameNode has detected a problem, for example too few blocks reported by the DataNodes or low disk space on the NameNode's storage directories.
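Before changing anything, it helps to confirm that safe mode really is the cause. The following is a minimal sketch, assuming the `hdfs` command is on your PATH and your user is allowed to run dfsadmin commands. The function name `safemode_is_on` is made up for this post.

```shell
# Return 0 if the NameNode reports safe mode ON, 1 if OFF,
# and 2 if the status could not be read (for example, NameNode unreachable).
safemode_is_on() {
    # "hdfs dfsadmin -safemode get" prints "Safe mode is ON" or "Safe mode is OFF".
    safemode_output="$(hdfs dfsadmin -safemode get 2>/dev/null)" || return 2
    case "$safemode_output" in
        *"Safe mode is ON"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example use:
#   if safemode_is_on; then echo "NameNode is in safe mode"; fi
```

If the function returns 2, the problem is more likely connectivity or permissions than safe mode itself.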

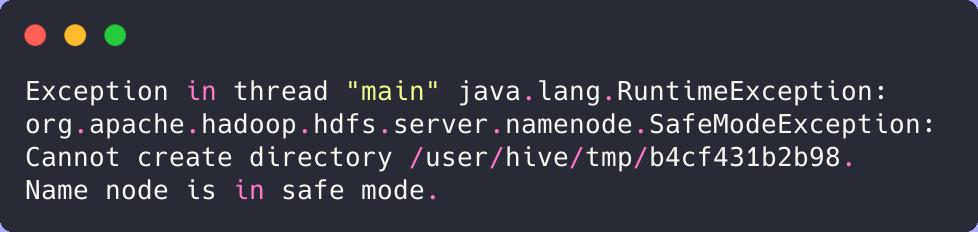

Here is an example of the error message that you might see:

How to resolve the exception

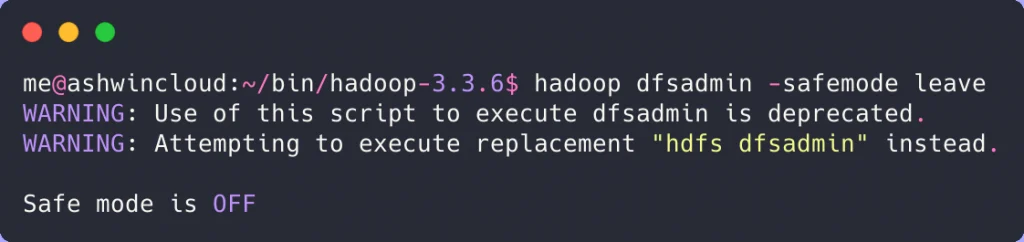

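To resolve the org.apache.hadoop.hdfs.server.namenode.SafeModeException exception, you need to take the NameNode out of safe mode. If the NameNode is still starting up, it is usually better to wait for it to leave safe mode on its own. Otherwise, you can force it out by running the following command as an HDFS superuser (the older hadoop dfsadmin form is deprecated in favor of hdfs dfsadmin):

hdfs dfsadmin -safemode leave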
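Forcing the NameNode out of safe mode while blocks are still missing can hide a real problem, so you may want to check the file system first. Here is a rough sketch that only leaves safe mode when `hdfs fsck /` reports the file system as HEALTHY. The function name `leave_safemode_if_healthy` is hypothetical, and running fsck on the root path can take a while on a large cluster.

```shell
# Leave safe mode only if fsck reports the root path as HEALTHY.
leave_safemode_if_healthy() {
    # fsck ends its report with a line such as:
    #   The filesystem under path '/' is HEALTHY
    fsck_report="$(hdfs fsck / 2>/dev/null)"
    case "$fsck_report" in
        *"is HEALTHY"*)
            hdfs dfsadmin -safemode leave
            ;;
        *)
            echo "fsck did not report HEALTHY; staying in safe mode" >&2
            return 1
            ;;
    esac
}
```

If the check fails, look at the fsck output and the DataNode logs before deciding whether to leave safe mode anyway.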

Things to keep in mind

Here are some additional things to keep in mind:

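- After a normal startup, the NameNode leaves safe mode automatically once the DataNodes have reported enough blocks (the required fraction is set by dfs.namenode.safemode.threshold-pct, 0.999 by default). If safe mode was entered manually, it stays on until you turn it off.

- Write operations fail with SafeModeException while the NameNode is in safe mode, so run jobs that modify the file system only after it has left safe mode. Read operations are still allowed.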
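For scripts that start right after the cluster does, waiting is often safer than forcing. The sketch below uses `hdfs dfsadmin -safemode wait`, which blocks until the NameNode has left safe mode, and then runs whatever command you pass in. The wrapper name `run_after_safemode` and the path in the usage comment are assumptions for illustration.

```shell
# Block until the NameNode is out of safe mode, then run the given command.
run_after_safemode() {
    # "-safemode wait" returns only after safe mode has been turned off.
    hdfs dfsadmin -safemode wait || return 1
    "$@"
}

# Example use (hypothetical path):
#   run_after_safemode hdfs dfs -mkdir -p /tmp/example
```

Note that this waits indefinitely if safe mode was entered manually, because in that case it never ends on its own.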

Put the NameNode back in safe mode

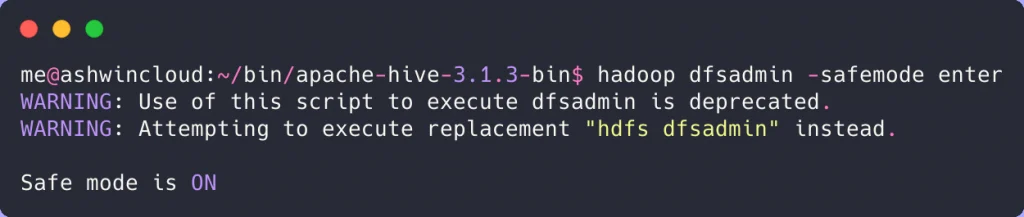

If you need to block modifications to the file system, for example during maintenance, you can put the NameNode into safe mode manually by running the following command:

hdfs dfsadmin -safemode enter
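One common reason to enter safe mode on purpose is to save the namespace to disk, since `hdfs dfsadmin -saveNamespace` only works while the NameNode is in safe mode. The following is a sketch of that sequence, not a complete maintenance procedure. The function name `checkpoint_namespace` is made up for this post.

```shell
# Enter safe mode, save the namespace, then leave safe mode again.
checkpoint_namespace() {
    hdfs dfsadmin -safemode enter || return 1

    # saveNamespace writes the current namespace to a new fsimage.
    hdfs dfsadmin -saveNamespace
    save_rc=$?

    # Leave safe mode even if the save failed, so writes are not blocked.
    hdfs dfsadmin -safemode leave || return 1
    return "$save_rc"
}
```

The function leaves safe mode even when the save fails, and it returns the exit status of the save so the caller can tell.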

The org.apache.hadoop.hdfs.server.namenode.SafeModeException exception can be a frustrating problem, but it is usually easy to resolve. By following the steps outlined in this post, you should be able to get the NameNode out of safe mode and start using HDFS again.
